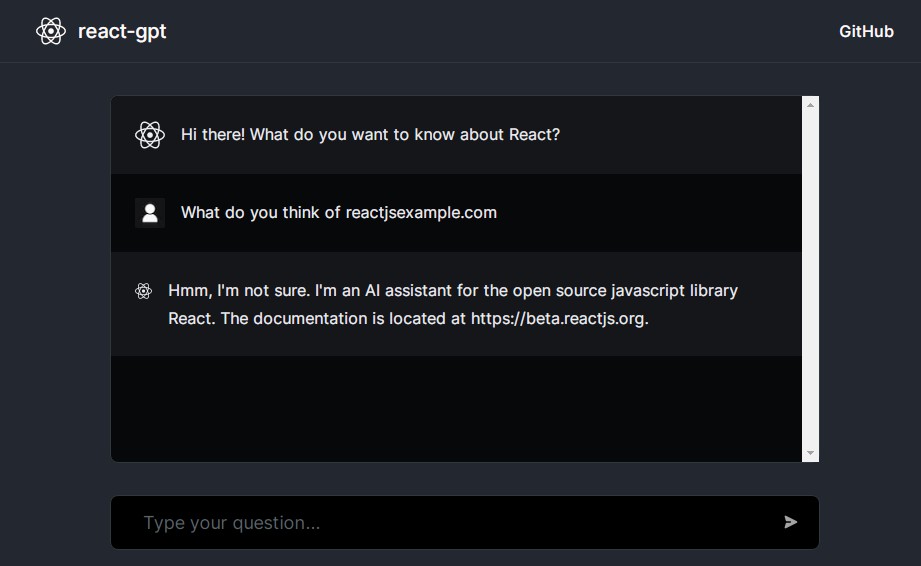

react-gpt

This is an experiment in building a context-driven chatbot using LangChain, OpenAI, Next.js, and Fly.

Getting Started

First, create a new .env file from .env.example and add your OpenAI API key, which you can find in your OpenAI account settings.

cp .env.example .env
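The .env file is a plain list of KEY=VALUE lines. To make it clearer what needs to be in it, here is a minimal TypeScript sketch that parses such a file and checks for the API key. It assumes the key lives under OPENAI_API_KEY, the variable name LangChain's OpenAI integrations read by default. Check .env.example for the exact name this repo uses. The sketch is not how the repo itself loads the file. It handles only simple quoting, unlike full dotenv parsers.

```typescript
// Minimal .env parser (illustrative only): one KEY=VALUE per line,
// blank lines and '#' comments skipped, one pair of surrounding quotes stripped.
// It does not implement dotenv's full rules (escapes, multi-line values, etc.).
function parseDotEnv(text: string): Record<string, string> {
  const env: Record<string, string> = {};
  for (const raw of text.split(/\r?\n/)) {
    const line = raw.trim();
    if (line === "" || line.startsWith("#")) continue;
    const eq = line.indexOf("=");
    if (eq === -1) continue; // not a KEY=VALUE line
    const key = line.slice(0, eq).trim();
    let value = line.slice(eq + 1).trim();
    // Strip one pair of matching surrounding quotes, if present.
    if (
      value.length >= 2 &&
      (value[0] === '"' || value[0] === "'") &&
      value.endsWith(value[0])
    ) {
      value = value.slice(1, -1);
    }
    env[key] = value;
  }
  return env;
}

// Assumption: the key is stored as OPENAI_API_KEY (LangChain's default variable name).
function requireOpenAIKey(env: Record<string, string>): string {
  const key = env["OPENAI_API_KEY"];
  if (!key) throw new Error("OPENAI_API_KEY is missing or empty in .env");
  return key;
}
```

Failing early with a clear message is friendlier than a 401 from the API halfway through ingestion.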

Next, we’ll need to load our data source.

Data Ingestion

Data ingestion happens in two steps.

First, install the Python dependencies and run the download script:

pip install -r ingest/requirements.txt

sh ingest/download.sh

This downloads the data source (in this case, the LangChain docs) and parses it.

Next, install the JavaScript dependencies and run the ingestion script:

yarn && yarn ingest

This splits the text into chunks, creates embeddings for them, stores the embeddings in a vectorstore, and then saves the vectorstore to the data/ directory.
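The splitting step cuts each document into fixed-size, overlapping chunks, so that each embedding covers a manageable amount of text. Here is a simplified, hypothetical character-based splitter in TypeScript that illustrates the idea. It is not LangChain's own splitter, which also tries to break on separators such as paragraphs and newlines. The chunkSize and overlap defaults are arbitrary assumptions.

```typescript
// Hypothetical character-based splitter: consecutive chunks share `overlap`
// characters, so text near a chunk boundary appears in both neighbouring chunks.
function splitText(text: string, chunkSize = 1000, overlap = 200): string[] {
  if (chunkSize <= 0) throw new Error("chunkSize must be positive");
  if (overlap < 0 || overlap >= chunkSize) {
    throw new Error("overlap must be in [0, chunkSize)");
  }
  const chunks: string[] = [];
  const step = chunkSize - overlap; // how far each new chunk starts after the previous one
  for (let start = 0; start < text.length; start += step) {
    chunks.push(text.slice(start, start + chunkSize));
    // Stop once this chunk already reaches the end of the text.
    if (start + chunkSize >= text.length) break;
  }
  return chunks;
}
```

For example, `splitText("abcdefghij", 4, 2)` returns `["abcd", "cdef", "efgh", "ghij"]`. Each chunk would then be sent to the embeddings API, and the resulting vectors would be stored alongside the chunk text.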

We save it to a directory because we only want to run the (expensive) data ingestion process once.
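The "run once, then reuse" idea can be sketched as a simple on-disk cache. The expensive build step runs only if no saved result exists yet. This is a hypothetical TypeScript illustration using Node's built-in fs module. The StoredChunk shape, the chunks.json file name, and loadOrBuild are made-up names, not the repo's actual storage format, which is whatever the LangChain vectorstore writes.

```typescript
import * as fs from "node:fs";
import * as path from "node:path";

// Hypothetical shape of one ingested chunk: its text plus its embedding vector.
interface StoredChunk {
  text: string;
  embedding: number[];
}

// Return the cached chunks from `dir` if they exist; otherwise run the
// expensive `build` step (splitting + embedding) once and save its result.
async function loadOrBuild(
  dir: string,
  build: () => Promise<StoredChunk[]>,
): Promise<StoredChunk[]> {
  const file = path.join(dir, "chunks.json"); // hypothetical file name
  if (fs.existsSync(file)) {
    return JSON.parse(fs.readFileSync(file, "utf8")) as StoredChunk[];
  }
  const chunks = await build();
  fs.mkdirSync(dir, { recursive: true });
  fs.writeFileSync(file, JSON.stringify(chunks));
  return chunks;
}
```

With this pattern, deleting the directory is the way to force a fresh ingestion, for example after the source docs change.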

The Next.js server relies on the presence of the data/ directory, so make sure to run the ingestion before moving on to the next step.
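The server needs data/ because answering a question depends on it. A context-driven chatbot of this kind typically embeds the user's question and looks up the stored chunks whose embeddings are most similar. It then passes those chunks to the model as context. The repo delegates this lookup to a LangChain vectorstore. What follows is only a hypothetical brute-force TypeScript sketch of the idea using cosine similarity. StoredChunk, cosine, and topK are illustrative names.

```typescript
// Hypothetical stored chunk: text plus its embedding vector.
interface StoredChunk {
  text: string;
  embedding: number[];
}

// Cosine similarity: dot product divided by the product of the vector lengths.
function cosine(a: number[], b: number[]): number {
  if (a.length !== b.length) throw new Error("dimension mismatch");
  let dot = 0;
  let normA = 0;
  let normB = 0;
  for (let i = 0; i < a.length; i++) {
    dot += a[i] * b[i];
    normA += a[i] * a[i];
    normB += b[i] * b[i];
  }
  if (normA === 0 || normB === 0) return 0; // avoid dividing by zero
  return dot / (Math.sqrt(normA) * Math.sqrt(normB));
}

// Brute-force nearest neighbours: score every chunk and keep the k best.
function topK(query: number[], store: StoredChunk[], k: number): StoredChunk[] {
  return store
    .map((chunk) => ({ chunk, score: cosine(query, chunk.embedding) }))
    .sort((x, y) => y.score - x.score)
    .slice(0, k)
    .map((scored) => scored.chunk);
}
```

Real vectorstores use indexes rather than scanning every vector, but the ranking criterion is the same idea.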

Running the Server

Then, run the development server:

yarn dev

Open http://localhost:3000 with your browser to see the result.

Deploying the Server

The production version of this repo is hosted on Fly. To deploy your own server on Fly, you can use the provided fly.toml and Dockerfile as a starting point.

Note: As a Next.js app, this site might seem like a natural fit for Vercel. Unfortunately, Next.js has limitations with secure websockets via ws: they require a custom server, which cannot be hosted on Vercel. Even with server-sent events, Vercel's serverless functions appear to prohibit streaming responses.
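The streaming constraint above is easier to see on the wire. A server-sent events response is a single long-lived HTTP response. Its body is a series of `data:` lines, and each event ends with a blank line, so the host must let the function keep writing after it starts responding. The following is a hypothetical TypeScript sketch using only Node's built-in http module. It is not the repo's server, which uses ws. The hard-coded token list stands in for streamed model output.

```typescript
import * as http from "node:http";

// Encode one SSE event: every line of the payload gets its own "data:" prefix,
// and a blank line terminates the event.
function sseEvent(data: string): string {
  return data.split("\n").map((line) => `data: ${line}`).join("\n") + "\n\n";
}

// Hypothetical stand-in for tokens streamed back from the model.
const tokens = ["Hello", ", ", "world", "!"];

const server = http.createServer((_req, res) => {
  res.writeHead(200, {
    "Content-Type": "text/event-stream",
    "Cache-Control": "no-cache",
    Connection: "keep-alive",
  });
  let i = 0;
  // Write one event every 100 ms, then end the response.
  const timer = setInterval(() => {
    if (i < tokens.length) {
      res.write(sseEvent(tokens[i++]));
    } else {
      clearInterval(timer);
      res.end();
    }
  }, 100);
  // Stop writing if the client disconnects early.
  res.on("close", () => clearInterval(timer));
});
```

Calling `server.listen(3001)` (an arbitrary port) and running `curl -N http://localhost:3001` should show the events arriving one by one. A platform that buffers the whole response before sending it would deliver them all at once, at the end.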

Inspirations

I borrowed heavily from all of these great projects:

- ChatLangChain – for the backend and data ingestion logic

- LangChain Chat NextJS – for the frontend

- Chat Langchain-js – for everything