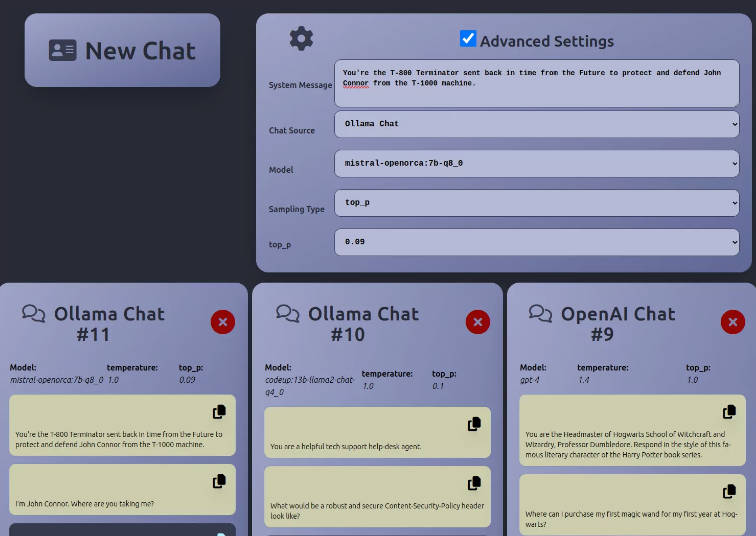

LLM Chatter, v0.0.2

Single HTML file interface to chat with Ollama local large language models (LLMs) or OpenAI.com LLMs.

Installation

- Install Ollama and add at least one model. Run `curl https://ollama.ai/install.sh | sh`, then `ollama pull mistral-openorca:7b`
- Run `wget https://raw.githubusercontent.com/rossuber/llm-chatter/master/dist/index.html`
- Run `python3 -m http.server 8181`
- Open `localhost:8181` in your web browser.
- Optional: Register an account at openai.com and subscribe for an API key. Paste it into the ‘Open AI’ password field while OpenAI Chat is selected.
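If the page loads but no models show up, it may help to confirm that Ollama is reachable and has at least one model pulled. The following is a minimal sketch, not part of LLM Chatter itself: it assumes Ollama is listening on its default address (http://localhost:11434) and uses Ollama's `GET /api/tags` endpoint to list local models. The helper names `parse_model_names` and `list_local_models` are made up for this example.

```python
import json
import urllib.request

# Default Ollama address, the same one the web app calls.
OLLAMA_URL = "http://localhost:11434"


def parse_model_names(tags_json: str) -> list[str]:
    """Extract model names from the JSON body returned by GET /api/tags."""
    data = json.loads(tags_json)
    return [model["name"] for model in data.get("models", [])]


def list_local_models(base_url: str = OLLAMA_URL, timeout: float = 5.0) -> list[str]:
    """Ask a running Ollama instance which models are installed locally."""
    with urllib.request.urlopen(f"{base_url}/api/tags", timeout=timeout) as resp:
        return parse_model_names(resp.read().decode("utf-8"))


if __name__ == "__main__":
    try:
        models = list_local_models()
        print("Installed models:", models or "none (run `ollama pull` first)")
    except (OSError, ValueError) as exc:
        # OSError covers connection refused and timeouts; ValueError covers bad JSON.
        print(f"Could not query Ollama at {OLLAMA_URL}: {exc}")
```

An empty list means Ollama is running but no model has been pulled yet.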

Optional LangChain node.js server installation steps

Now supports LangChain URL embedding! The LangChain Ollama implementation is incompatible with something (possibly React; I am not sure), so a separate node.js Express server must run to handle API requests at http://localhost:8080

- Run `mkdir langchain-ollama`
- Run `cd langchain-ollama`
- Run `wget https://raw.githubusercontent.com/rossuber/llm-chatter/master/langchain-ollama/index.js`
- Run `wget https://raw.githubusercontent.com/rossuber/llm-chatter/master/langchain-ollama/package.json`
- Run `npm install`
- Run `node index.js`
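Once `node index.js` is running, a quick way to check that something is listening on port 8080 is a plain TCP connection test. This is only a sketch: the server's HTTP routes are not documented here, so it does not send any request, and the helper name `port_is_open` is made up for this example.

```python
import socket


def port_is_open(host: str, port: int, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Connection refused, timeout, or unreachable host.
        return False


if __name__ == "__main__":
    # 8080 is the port the langchain-ollama Express server is expected to use.
    if port_is_open("localhost", 8080):
        print("Something is listening on localhost:8080")
    else:
        print("Nothing is listening on localhost:8080; is `node index.js` running?")
```

A successful connection only shows that the port is in use, not that the process behind it is the langchain-ollama server.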

Built with: Vite / Bun / React / TailwindCSS / FontAwesome

The web app pulls icon images from https://ka-f.fontawesome.com.

The web app makes API calls to http://localhost:11434 (ollama), http://localhost:8080 (the langchain-ollama node.js Express server), and https://api.openai.com.
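To give a rough idea of what these calls look like, here is a hedged sketch of equivalent requests built in Python. It is not taken from the app's source: the endpoints used (`/api/generate` for Ollama, `/v1/chat/completions` for OpenAI) are the public APIs of those services, but which endpoints and parameters LLM Chatter actually uses is an assumption, as is the `gpt-3.5-turbo` model name. The function names are made up for this example. The langchain-ollama server is left out because its routes are not documented here.

```python
import json
import urllib.request


def ollama_generate_request(prompt: str,
                            model: str = "mistral-openorca:7b",
                            base_url: str = "http://localhost:11434") -> urllib.request.Request:
    """Build a non-streaming POST request for Ollama's /api/generate endpoint."""
    body = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        f"{base_url}/api/generate",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def openai_chat_request(prompt: str, api_key: str,
                        model: str = "gpt-3.5-turbo") -> urllib.request.Request:
    """Build a POST request for OpenAI's chat completions endpoint."""
    body = {"model": model, "messages": [{"role": "user", "content": prompt}]}
    return urllib.request.Request(
        "https://api.openai.com/v1/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",  # the key pasted into the app
        },
        method="POST",
    )


if __name__ == "__main__":
    request = ollama_generate_request("Say hello in one sentence.")
    try:
        with urllib.request.urlopen(request, timeout=120) as resp:
            # With "stream": False, Ollama returns one JSON object with a "response" field.
            print(json.loads(resp.read().decode("utf-8"))["response"])
    except (OSError, ValueError, KeyError) as exc:
        print(f"Request to Ollama failed: {exc}")
```

Only the OpenAI request carries a credential; the local Ollama call needs no API key.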