Remix SEO

A collection of SEO utilities, such as sitemap and robots.txt generation, for a Remix application.

Features

- Generate Sitemap

- Generate Robots.txt

Installation

To use it, install it from npm (or yarn):

npm install @balavishnuvj/remix-seo

Usage

For miscellaneous routes at the root, such as /robots.txt and /sitemap.xml, we can create a single function that handles all of them instead of polluting our routes folder.

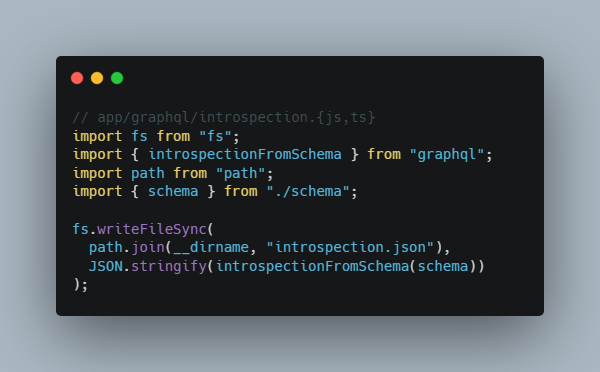

For that, let's create a file called otherRootRoutes.server.ts. The file name can be anything, but make sure it ends with .server.{ts|js} so that it is only imported on the server.

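// otherRootRoutes.server.ts
import type { EntryContext } from "remix";

// A handler returns a Response for its path, or null to let Remix render the page.
type Handler = (
  request: Request,
  remixContext: EntryContext
) => Promise<Response | null> | null;

// Map of pathname -> handler. Empty for now; routes are added in the sections below.
export const otherRootRoutes: Record<string, Handler> = {};

// Wrap each entry so it only responds when the request pathname matches its key.
export const otherRootRouteHandlers: Array<Handler> = [
  ...Object.entries(otherRootRoutes).map(([path, handler]) => {
    return (request: Request, remixContext: EntryContext) => {
      if (new URL(request.url).pathname !== path) return null;
      return handler(request, remixContext);
    };
  }),
];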
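To see the path-matching step in isolation, here is a minimal sketch that runs outside Remix. It assumes plain URL strings and string bodies in place of Request, Response and EntryContext, and the route bodies are placeholders; only the "record of paths turned into a list of guarded handlers" pattern mirrors the code above.

```typescript
// Simplified handler (assumption for this sketch): takes the request URL and
// returns a body string, or null when it does not apply.
type SketchHandler = (url: string) => string | null;

// Path-keyed table shaped like otherRootRoutes. The bodies are placeholders.
const sketchRoutes: Record<string, SketchHandler> = {
  "/robots.txt": () => "User-agent: *",
  "/sitemap.xml": () => "<urlset/>",
};

// Same transformation as otherRootRouteHandlers: each entry returns null
// unless the URL's pathname equals its key exactly.
const guardedHandlers: SketchHandler[] = Object.entries(sketchRoutes).map(
  ([path, handler]) =>
    (url: string) =>
      new URL(url).pathname === path ? handler(url) : null
);

// Only the matching entry produces a body; the query string is ignored
// because only `pathname` is compared.
console.log(guardedHandlers.map((h) => h("https://example.com/robots.txt?v=2")));
// [ 'User-agent: *', null ]
```

Because the comparison is an exact string match, a request for /robots.txt/ (with a trailing slash) would not match in this sketch.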

Then import this file in your entry.server.tsx and run its handlers before rendering. The lines marked "Added" are the only changes:

// entry.server.tsx
import { renderToString } from "react-dom/server";
import { RemixServer } from "remix";
import type { EntryContext } from "remix";
import { otherRootRouteHandlers } from "./otherRootRoutes.server"; // Added

export default async function handleRequest(
  request: Request,
  responseStatusCode: number,
  responseHeaders: Headers,
  remixContext: EntryContext
) {
  // Added: give each root-route handler a chance to respond first.
  for (const handler of otherRootRouteHandlers) {
    const otherRouteResponse = await handler(request, remixContext);
    if (otherRouteResponse) return otherRouteResponse;
  }

  // No handler matched, so render the Remix app as usual.
  const markup = renderToString(
    <RemixServer context={remixContext} url={request.url} />
  );

  responseHeaders.set("Content-Type", "text/html");

  return new Response("<!DOCTYPE html>" + markup, {
    status: responseStatusCode,
    headers: responseHeaders,
  });
}
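The loop in handleRequest gives every root-route handler a chance to answer before Remix renders the page. Below is a minimal sketch of that control flow outside Remix. It assumes plain strings in place of Request and Response, the two handlers are hypothetical, and renderApp is a hypothetical stand-in for renderToString(<RemixServer />).

```typescript
// Simplified async handler (assumption for this sketch): resolves to a body
// string, or returns null when the request is not for its path.
type AsyncSketchHandler = (url: string) => Promise<string | null> | null;

// Hypothetical handlers; each checks its own path, like the guarded list.
const rootHandlers: AsyncSketchHandler[] = [
  (url) =>
    new URL(url).pathname === "/robots.txt"
      ? Promise.resolve("User-agent: *\nAllow: /")
      : null,
  (url) =>
    new URL(url).pathname === "/sitemap.xml"
      ? Promise.resolve("<urlset/>")
      : null,
];

// Hypothetical stand-in for the normal Remix render.
function renderApp(url: string): string {
  return `<!DOCTYPE html><p>${new URL(url).pathname}</p>`;
}

// Mirrors the added loop: the first non-null response wins; otherwise the
// request falls through to the regular render.
async function handleRequestSketch(url: string): Promise<string> {
  for (const handler of rootHandlers) {
    const response = await handler(url); // awaiting null simply yields null
    if (response) return response;
  }
  return renderApp(url);
}

handleRequestSketch("https://example.com/robots.txt").then(console.log);
handleRequestSketch("https://example.com/blog").then(console.log);
```

Since handlers run in array order and the loop returns on the first match, putting cheap or frequently hit paths first keeps the extra work per page request small.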

Sitemap

To generate a sitemap, @balavishnuvj/remix-seo needs the context of all your routes.

This assumes you have already created a file to handle all root routes. If not, see Usage above.

Then add the config for your sitemap:

// otherRootRoutes.server.ts
import type { EntryContext } from "remix";
import { generateSitemap } from "@balavishnuvj/remix-seo";

type Handler = (
  request: Request,
  remixContext: EntryContext
) => Promise<Response | null> | null;

export const otherRootRoutes: Record<string, Handler> = {
  "/sitemap.xml": async (request, remixContext) => {
    return generateSitemap(request, remixContext, {
      siteUrl: "https://balavishnuvj.com",
    });
  },
};

export const otherRootRouteHandlers: Array<Handler> = [
  ...Object.entries(otherRootRoutes).map(([path, handler]) => {
    return (request: Request, remixContext: EntryContext) => {
      if (new URL(request.url).pathname !== path) return null;
      return handler(request, remixContext);
    };
  }),
];

generateSitemap takes three parameters: the request, the EntryContext, and SEOOptions.

SEOOptions lets you configure the sitemap:

export type SEOOptions = {
  siteUrl: string; // URL where the site is hosted, e.g. https://balavishnuvj.com
  headers?: HeadersInit; // Additional headers
  /*
    e.g.:
    headers: {
      "Cache-Control": `public, max-age=${60 * 5}`,
    },
  */
};
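For reference, a sitemap is an XML document in the sitemaps.org <urlset> format, with one <url><loc> entry per page, and siteUrl supplies the absolute prefix for each location. The sketch below is a hypothetical helper, not the library's implementation: it takes an explicit list of paths, whereas generateSitemap derives the routes from remixContext, and it only emits <loc> (no lastmod or priority).

```typescript
// Escape the five XML special characters so URLs are safe inside <loc>.
function escapeXml(value: string): string {
  return value
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;")
    .replace(/'/g, "&apos;");
}

// Hypothetical helper: builds a sitemaps.org <urlset> from siteUrl and
// an explicit list of route paths (an assumption of this sketch).
function buildSitemapXml(siteUrl: string, paths: string[]): string {
  // Drop one trailing slash so "https://a.com/" + "/blog" does not yield "//blog".
  const base = siteUrl.replace(/\/$/, "");
  const entries = paths
    .map((path) => `  <url>\n    <loc>${escapeXml(base + path)}</loc>\n  </url>`)
    .join("\n");
  return (
    '<?xml version="1.0" encoding="UTF-8"?>\n' +
    '<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">\n' +
    entries +
    "\n</urlset>"
  );
}

console.log(buildSitemapXml("https://balavishnuvj.com/", ["/", "/blog"]));
```

The sitemap protocol requires absolute URLs in <loc>, which is why siteUrl is a required option rather than something inferred from relative route paths.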

Robots

You can add this to the root routes as shown above, or you can create a new file named robots[.txt].ts in your routes folder.

To generate robots.txt, call generateRobotsTxt:

generateRobotsTxt([
  { type: "sitemap", value: "https://balavishnuvj.com/sitemap.xml" },
  { type: "disallow", value: "/admin" },
]);

generateRobotsTxt takes two arguments.

The first is an array of policies:

export type RobotsPolicy = {
  type: "allow" | "disallow" | "sitemap" | "crawlDelay" | "userAgent";
  value: string;
};

The second, RobotsConfig, is for additional configuration:

export type RobotsConfig = {
  appendOnDefaultPolicies?: boolean; // Whether the default policies should be used
  /*
    Default policies:
    const defaultPolicies: RobotsPolicy[] = [
      {
        type: "userAgent",
        value: "*",
      },
      {
        type: "allow",
        value: "/",
      },
    ];
  */
  headers?: HeadersInit; // Additional headers
  /*
    e.g.:
    headers: {
      "Cache-Control": `public, max-age=${60 * 5}`,
    },
  */
};
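Each RobotsPolicy corresponds to one standard robots.txt directive line. The sketch below is a hypothetical renderer, not the library's code, that shows the mapping. Two assumptions are labeled in the comments: a missing appendOnDefaultPolicies is treated as true, and the default policies are placed before the ones you pass in.

```typescript
// Same shape as the RobotsPolicy type above.
type RobotsPolicy = {
  type: "allow" | "disallow" | "sitemap" | "crawlDelay" | "userAgent";
  value: string;
};

// Standard robots.txt directive name for each policy type.
const directiveNames: Record<RobotsPolicy["type"], string> = {
  userAgent: "User-agent",
  allow: "Allow",
  disallow: "Disallow",
  sitemap: "Sitemap",
  crawlDelay: "Crawl-delay",
};

// Mirrors the documented default policies.
const defaultPolicies: RobotsPolicy[] = [
  { type: "userAgent", value: "*" },
  { type: "allow", value: "/" },
];

// Hypothetical renderer. Assumptions: a missing appendOnDefaultPolicies
// counts as true, and defaults come first.
function renderRobotsTxt(
  policies: RobotsPolicy[],
  config: { appendOnDefaultPolicies?: boolean } = {}
): string {
  const all =
    config.appendOnDefaultPolicies !== false
      ? [...defaultPolicies, ...policies]
      : policies;
  return all.map((p) => `${directiveNames[p.type]}: ${p.value}`).join("\n");
}

console.log(
  renderRobotsTxt([
    { type: "sitemap", value: "https://balavishnuvj.com/sitemap.xml" },
    { type: "disallow", value: "/admin" },
  ])
);
// User-agent: *
// Allow: /
// Sitemap: https://balavishnuvj.com/sitemap.xml
// Disallow: /admin
```

Putting the defaults first means the output opens with a User-agent line, which matters because Allow and Disallow rules in robots.txt apply to the group started by the preceding User-agent line.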

If you are using a single file to handle both the sitemap and robots.txt, it looks like this:

// otherRootRoutes.server.ts
import type { EntryContext } from "remix";
import { generateRobotsTxt, generateSitemap } from "@balavishnuvj/remix-seo";

type Handler = (
  request: Request,
  remixContext: EntryContext
) => Promise<Response | null> | null;

export const otherRootRoutes: Record<string, Handler> = {
  "/sitemap.xml": async (request, remixContext) => {
    return generateSitemap(request, remixContext, {
      siteUrl: "https://balavishnuvj.com",
      headers: {
        "Cache-Control": `public, max-age=${60 * 5}`,
      },
    });
  },
  "/robots.txt": async () => {
    return generateRobotsTxt([
      { type: "sitemap", value: "https://balavishnuvj.com/sitemap.xml" },
      { type: "disallow", value: "/admin" },
    ]);
  },
};

export const otherRootRouteHandlers: Array<Handler> = [
  ...Object.entries(otherRootRoutes).map(([path, handler]) => {
    return (request: Request, remixContext: EntryContext) => {
      if (new URL(request.url).pathname !== path) return null;
      return handler(request, remixContext);
    };
  }),
];